The Modern Data Platform – challenge 3 – SLOW PERFORMANCE. Part One

The Modern Data Platform, Challenge 3, Part 1…

In the first part of this blog series I discussed the traditional data warehouse and some of the issues that can occur in the period following the initial project.

I concluded by outlining the 5 common challenges that I hear from customers.

- INFLEXIBILITY

- COMPLEX ARCHITECTURE

- SLOW PERFORMANCE

- OLD TECHNOLOGY

- LACK OF GOVERNANCE

This blog will focus on the third of these issues, SLOW PERFORMANCE.

How many times have you heard someone say “I don’t like that it’s too slow, can it be faster?”

If you are anything like me then that question is a red rag to a bull. As this post will attempt to show, it is dangerously easy to make broad brush statements about performance in relation to data warehouses and Business Intelligence solutions.

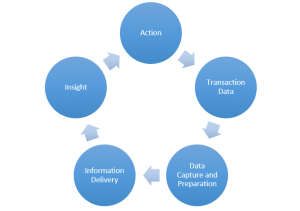

In an earlier post I described the information value chain, how a good Business Intelligence solution should take raw ingredients and end up with insight that can be easily actioned.

In reality this value chain is a careful construction of a number of different components or operations that individually perform specific functions that in themselves may be deemed to perform in a satisfactory manner.

So what do we mean by slow performance?

Let’s think about the experience of eating out in a restaurant, there are a number of distinct steps that are taken between the menu being decided, you placing your order, and then finally (hopefully) receiving your food and feeling satisfied.

Clearly some of these activities should be completed before you decide what you want to eat, and therefore have no bearing on how long it takes between you placing your order and eating your food. However, there are also a number of variables that can impact on the wait time.

In an earlier blog I covered issues relating to INFLEXIBILITY in established data architectures. The bulk of these issues, to apply the kitchen analogy, are directly related to the Pre-Order stage. In other words, changes to ingredients, the menu and individual recipes. When we talk about SLOW PERFORMANCE of data warehouses then we often think about 2 distinct stages.

- The time it takes for the data to be ready to be queried– BACKEND (DATA PREP)

- The time it takes for the data to be queried – FRONTEND (DATA CONSUMPTION)

This post will dig deeper into the causes of each of these issues and discuss the traditional solutions for addressing / mitigating their effect.

BACKEND / DATA PREP ISSUES

It is easy to focus discussions regarding performance on the preparation stage onwards. The order is in and the clock is ticking. The cooking process begins and any delays and issues introduced are immediately felt downstream. However, there is one critical issue that can occur before we begin to cook the meal / prepare our data for consumption. What if we have the wrong ingredients, no ingredients or they arrive too late!

Source to Staging

A common place to find performance issues in established data warehouse environments is in the extraction of data from the source systems, i.e. the process of getting quality ingredients on the kitchen shelf. Data Quality will be covered in a later post focused on Governance within Data Warehouses, so we will skip past that for the moment. Assuming that your source system is still available and that the data structures have not changed, then what can negatively impact this performance and keep you waiting?

- Degradation in extract times due to source system transactional load – your ingredient supplier is too busy to get to your kitchen in time, you will have to wait longer.

- Growth in source system data volumes – your supplier carries way more stock than before and it is taking too long to pick your delivery.

- Growth in extract volumes – you are asking for larger deliveries and the supplier or indeed your goods receipt team cannot cope.

Not only can these issues have an appreciable effect on the time it takes for the ingredients (source data) to arrive at the back door of the kitchen (data warehouse). They also impact on the amount of time it takes to move them into the kitchen storage (data warehouse staging area) itself.

In some cases, an explosion in volumes may lead to the kitchen no longer being capable of storing the ingredients which can of course severely limit the ability to service customers.

When dealing with new data arriving for your data warehouse, it is essential that you employ delta loads to ensure you only get the fresh data you need. Data that is new or has changed. The kitchen equivalent of ensuring you only receive more of the ingredients that you actually need rather than a full delivery of everything every day.

Staging to Presentation

This is where the main preparation and cooking happens, also invariably this is where a number of incremental performance issues can have a seismic effect. A common architectural mistake is to not look at the ETL process as a value chain, this often results in an ETL process that resembles a loose collection of semi-related tasks rather than an optimal and lean end to end process. The kitchen equivalent of a group of chefs operating independently without orchestration or leadership, not as a cohesive unit.

Common ETL processes should be standardised, using repeatable steps to lower maintenance and management costs. If you have common ingredients that are required across multiple recipes then why not clean and prepare them in one go, in advance. Don’t duplicate effort.

Unnecessary duplication of data can also be a real problem, the culinary equivalent of taking multiple sets of ingredients to the workspace for every dish. A decision that not only introduces overheads to the individual activity but can have knock on effects further down the process chain. In a traditional data warehouse, working with large data sets takes time as does copying them, ignoring the immediate issues around the disk space they take! Traditional dimensionally modelled data warehouses often slow down considerably during the joins to the dimension tables that are used to assign key allocations within the fact tables. These often rely on large complex queries that can be constrained by the raw processing and IO speed of the database server, in other words, how fast your chefs can perform complex functions.

In Staging to Preparation there are two factors that contribute to poor performance more than anything else other:

- Costly data read and write activities – shifting data back and forth from slow disks

- Inefficient processes – whether hand coded or using an ETL tool, there is often unnecessary duplication of effort and minimal re-use

The end result is that the traditional data warehouse is often only prepared and made available overnight during an increasingly pressurised batch window. It cannot be overstated how many times I hear complaints from customers about scheduled overnight reports that have been delayed due to failed or late running data warehouse loads. Team members who silently pray before checking their email at 06:00 dreading the error report from their data warehouse. Even if your process currently fits within your nightly batch window, it shouldn’t be hard to appreciate that in today’s fast moving business environment it is not too healthy to only be able to make decisions based on the position at the end of yesterday. You wouldn’t want your meal prepared the day before using old ingredients would you?

To find out more detail on the topic of SLOW PERFORMANCE, read the upcoming blog on Thursday 01/09/2016. We will start looking into frontend data consumption.

The Modern Data Platform delivers on all the requirements for a next generation data warehouse. Enabling organisations to radically simplify their existing legacy or overly complex solutions in order to lower running costs, improve agility and deliver real business value. Remember to follow the rest of the posts in this blog series where we will explore in detail the 3 remaining common challenges of traditional data warehousing. To find out how the Modern Data Platform addresses the challenge of slow performance, download our webinar recodring.